AMD has created a new graphics processor designed specifically for AI systems and large language models. Unlike the competing NVIDIA product, it can work with considerably larger amounts of memory.

MI300X

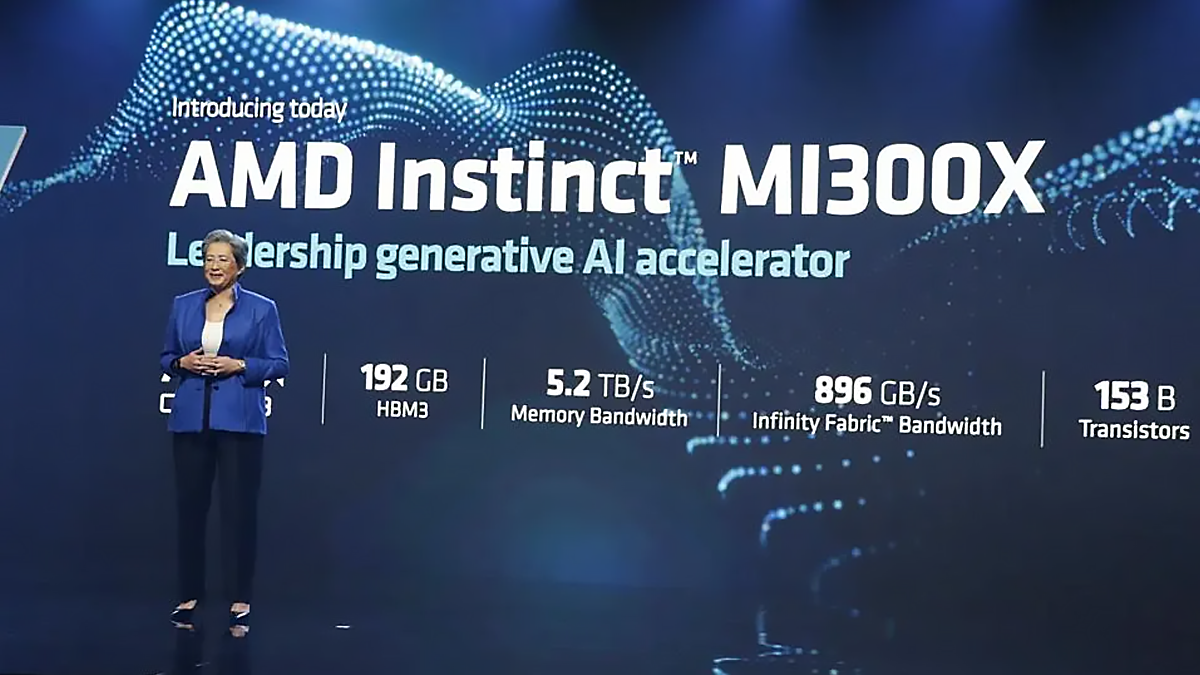

AMD has unveiled the new MI300X graphics processor. Although it is positioned as a GPU (graphics processing unit), its main purpose is to compete with NVIDIA's products for running high-performance artificial intelligence (AI) systems.

NVIDIA currently holds about 80% of this market, and its processors power AI systems such as ChatGPT, Bing AI and Stable Diffusion.

GPUs are preferred in such systems because they can run a large number of relatively simple computational operations in parallel, and such operations are the basis of how neural networks run and are trained.
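To illustrate why this kind of work parallelizes well, here is a minimal Python sketch of a single neural network layer. The function names and the sample numbers are made up for illustration. Each output value is a simple weighted sum that depends only on its own row of weights, so the rows can be computed in any order or all at once. The thread pool here only demonstrates that independence; it is not meant to show a real speedup, and it is not how a GPU is actually programmed.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights_row, inputs):
    """Weighted sum for one neuron; it needs only its own row of weights."""
    return sum(w * x for w, x in zip(weights_row, inputs))


def layer_sequential(weights, inputs):
    """Compute the neurons one after another."""
    return [neuron_output(row, inputs) for row in weights]


def layer_parallel(weights, inputs, workers=4):
    """Hand each neuron to a worker; no neuron waits for another's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: neuron_output(row, inputs), weights))


# Illustrative values only: 4 neurons, 3 inputs each.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, 1, 0]]
inputs = [1, 0, -1]

print(layer_sequential(weights, inputs))  # [-2, -2, -2, 0]
print(layer_parallel(weights, inputs))    # same values, computed independently
```

A GPU applies the same idea at a much larger scale, with thousands of such simple operations in flight at once.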

AMD CEO Lisa Su said that "the market for data center AI accelerators will increase by 5x to over $150 billion", an outlook that was likely the main driver behind the new product.

A side effect of AMD's new processor coming to market could be a wider audience for AI products, as competition brings down the cost of operating the underlying infrastructure.

Up to 192 GB of HBM3

The MI300X was designed specifically for advanced AI products and therefore supports up to 192 GB of high-speed HBM3 memory, which means it can hold larger models than competing processors.

Besides parallel processing of computational operations, the amount of memory available to the processor is itself critical for AI systems. For a sense of scale: in the demonstration arranged by AMD, the MI300X ran the Falcon model, which contains 40 billion parameters, while OpenAI's GPT-3 model contains 175 billion parameters.
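A rough back-of-the-envelope calculation shows why memory capacity matters for the parameter counts mentioned above. The sketch below rests on assumptions that are not from AMD's announcement: each parameter is stored as a 16-bit number (2 bytes), only the model weights are counted (no activations or other runtime overhead), and 1 GB is taken as 10^9 bytes. The function names are illustrative.

```python
import math

BYTES_PER_PARAM_FP16 = 2  # assumption: 16-bit weights, 2 bytes per parameter
GB = 10**9                # assumption: decimal gigabytes


def weights_size_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Approximate memory needed for the model weights alone, in GB."""
    return num_params * bytes_per_param / GB


def devices_needed(num_params, device_memory_gb=192,
                   bytes_per_param=BYTES_PER_PARAM_FP16):
    """Minimum number of devices whose combined memory holds the weights."""
    size = weights_size_gb(num_params, bytes_per_param)
    return math.ceil(size / device_memory_gb)


models = {
    "Falcon (40 billion parameters)": 40_000_000_000,
    "GPT-3 (175 billion parameters)": 175_000_000_000,
}

for name, params in models.items():
    size = weights_size_gb(params)
    count = devices_needed(params)
    print(f"{name}: about {size:.0f} GB of weights, "
          f"at least {count} device(s) with 192 GB each")
```

Under these assumptions, the 40-billion-parameter model needs about 80 GB and fits in the memory of a single 192 GB processor, while a 175-billion-parameter model needs about 350 GB and would have to be split across at least two. Real deployments need extra memory beyond the weights, so these figures are lower bounds.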

The software platform

In addition to the hardware, AMD also presented ROCm, its own software platform for AI processors.

Some media outlets have already dubbed it a competitor to CUDA, NVIDIA's popular platform for programming graphics processors.

Conclusion

AI continues to develop by leaps and bounds, and the pace is unlikely to slow in the near future. This fuels both user interest and a response from manufacturers.

We, in turn, hope that this development will ultimately have a positive effect on the standards and practices of IT infrastructure, and we will do our best to keep up with it, to the joy and growth of our customers! 😉
